For years, Luke and I have wanted to build fantasy hockey projections – often fueled by our desire to have them for ourselves. This year, we finally found time to build a projection system for the standard fantasy hockey categories in most leagues. All fantasy projections can now be found here. For a very quick guide to using the tool/page, please reference the User Guide. Let’s cover what’s going on here and how it all works!

The projection system consists of 16 models, which produce a total of 20 metrics covering skaters and goalies. There’s a lot going on here, but, for the most part, the models are all similar. It’s just a lot of code. This is the first time we here at Evolving Hockey have done anything connected to or covering fantasy hockey. For some, seeing a +/- or GAA projection on the site may feel like sacrilege (it is). But fantasy hockey is a great time. And if we’re going to do this, we’re going to do it right, damn it. Up front, we need to thank both the Five Hole Fantasy Hockey Podcast and Keeping Karlsson for their help and input over the last few weeks. They were both great resources from the world of fantasy hockey that we did not previously have. In addition, we’ve referenced the r/fantasyhockey subreddit a ton over the last few months. The fantasy hockey community overall has been fantastic. Alright, let’s get into it.

The Metrics

The projections available include the following:

Skaters

- Games Played (GP)

- Average Time on Ice (ATOI)

- Goals (G)

- Assists (A)

- Shots on Goal (SOG)

- Blocks (BLK)

- Hits (HIT)

- Faceoff Wins (FW)

- Penalty Minutes (PIM)

- Plus-Minus (+/-)

- Powerplay Points (PPP)

- Shorthanded Points (SHP)

Goalies

- Game Starts (GS)

- Shots Against (SA)

- Goals Against (GA)

- Wins (W)

We also have more metrics available than there are models, because we can combine multiple projected metrics to produce another metric. In this case, we also have the following stats:

- Total Time On Ice (GP * ATOI)

- Saves (SA – GA)

- Save % (1 – [GA / SA])

- Goals Against Average (GA / GP)
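The combinations above are simple arithmetic on the modeled outputs. A minimal sketch, assuming the projections are available as plain numbers; the function names and the sample values are hypothetical and only illustrate the formulas listed above:

```python
def total_toi(gp, atoi):
    """Total Time On Ice = GP * ATOI (ATOI in minutes per game)."""
    return gp * atoi


def derived_goalie_metrics(gp, shots_against, goals_against):
    """Combine modeled goalie projections into the derived stats listed above.

    Follows the formulas in the list: Saves = SA - GA, Save % = 1 - GA / SA,
    GAA = GA / GP. Zero denominators return 0.0 (an assumption of this sketch).
    """
    saves = shots_against - goals_against
    save_pct = 1 - goals_against / shots_against if shots_against else 0.0
    gaa = goals_against / gp if gp else 0.0
    return {"SV": saves, "SV%": save_pct, "GAA": gaa}


# Hypothetical goalie: 60 games, 1800 shots against, 150 goals against.
goalie = derived_goalie_metrics(gp=60, shots_against=1800, goals_against=150)
# Hypothetical skater: 82 games at 20.5 minutes per game.
skater_toi = total_toi(gp=82, atoi=20.5)
```

Deriving Save % this way means it always stays consistent with the projected Shots Against and Goals Against, which is the main reason to model the raw counts first.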

I’ll get into this a little more below, but I found in modeling various metrics, especially for some percentages and/or ratios, it was often better and more reliable to model the underlying raw metrics that go into those metrics than it was to try and predict the result. Especially with something like save %, which, full disclosure, is one of the most unreliable and unrepeatable metrics I’ve ever had to work with. It’s also a bad metric (even for fantasy hockey). In addition, based on our research, it seems the majority of leagues often prefer the raw goalie metrics anyway. But we’ve provided the additional metrics above for consistency. However, we’ve chosen to not project game winning goals, shutouts, and overtime losses. This was due to both the extremely unreliable nature of these metrics and the overall minimal values these metrics produce. All three of these metrics are quite random – we felt it would be a little ridiculous to try and project these outcomes.

The Models

For every model I built, I relied on LightGBM, specifically its Gradient Boosting Decision Tree (GBDT) algorithm, to predict all the various metrics. Over the years, I’ve found that gradient boosting models perform quite well with hockey data, specifically when working with non-linear distributions within the data overall, and, in this case, when there are several categorical variables (age, draft, position, signing data, etc.) that perform well as predictors. That’s not to say that I necessarily needed to use LightGBM or any gradient boosting method for this system, or at least not for all the models. In a lot of these situations, linear regression or any of its cousins (ridge regression, elastic net, MARS, etc.) would’ve likely performed quite well. Simply put, there are a lot of models and a lot of code, and it was easier to stick with the same algorithm to build all of them. It also provided a certain consistency in the predictions that was easier to work with (and adjust, more below).

I used data from Evolving Hockey, the NHL’s player information, and contract data from CapFriendly. I tested as many features as I could from our site (GAR, xGAR, RAPM, etc.), but these “advanced” metrics were only used in a few of our models – they weren’t particularly good at predicting standard box score metrics, which I already kind of knew. The various models were trained on 2-3 years of prior data for all players, with some modifications in the current projections for players with only 1 year of prior data (Kaprizov, etc.). For the most part, there isn’t anything particularly interesting to report here. By and large, the most important predictors for a given metric were the prior years’ versions of that metric. In testing, the models performed well overall given the limitations of some of the metrics. For example, goalies are incredibly random and hard to reliably predict, and things like shorthanded points, +/-, and even goals can be very random as well. We did have to make some adjustments to the raw projections after the fact, which I cover towards the end of this write-up.

The Weights

We’ve provided options for both points and categories leagues. Categories leagues do not use weights, so let’s first discuss points leagues. We knew there were a lot of different ways to weight fantasy metrics in points leagues, but we weren’t quite ready for just how many weighting systems were out there. Everyone relies on one of the numerous default weighting systems from ESPN, Yahoo, etc. or has their own method for their own league. So, we had to figure out a method that worked in a general way to arrive at final ratings for all players (forwards, defensemen, and goalies). After testing numerous weighting systems, we landed on one suggested by Five Hole Fantasy Hockey Podcast with a slight increase in the weight we gave Saves overall (0.2 increased to 0.25). The default weights look like this:

Skaters

| Metric | Weight |
|---|---|
| Goals (G) | 3 |
| Assists (A) | 2 |
| Points (Pts) | 1 |
| Shots on Goal (SOG) | 0.15 |
| Blocks (BLK) | 0.1 |
| Hits (HIT) | 0.1 |
| Average Time on Ice (ATOI) | 1 |
| Faceoff Wins (FOW) | 0.05 |
| Penalty Minutes (PIM) | 0.25 |
| Plus-Minus (P_M) | 1 |
| Powerplay Points (PPP) | 1 |
| Shorthanded Points (SHP) | 1 |

Goalies

| Metric | Weight |
|---|---|
| Game Starts (GS) | 0.5 |
| Wins (W) | 3.5 |
| Saves (SV) | 0.2 |
| Save % (SV%) | 1 |
| Goals Against (GA) | -1.5 |
| Goals Against Average (GAA) | -1 |

Note: Metrics that are primarily used in categories leagues (ATOI, SV%, GAA) are given a weight of 1 by default. If your points league uses these, please adjust accordingly.

As you will see on the page, you can adjust the weights for your league. In addition, you can also select the metrics that are applicable. The rankings, however, require a little explaining. Again, I’ll start with points leagues. As covered in our user guide linked at the top of this article, we provide two different overall ratings (FPV and FPV Adjusted) along with the positional rank (Pos Rank) for all skaters and goalies. Fantasy Points Value (FPV) is the base form: each metric selected by the user is multiplied by its respective weight, and the results are summed. Fantasy Points Value Adjusted (FPV Adj) is the FPV value centered around the positional mean (sometimes referred to as group-mean centering); we take a given player’s FPV value and subtract the positional mean from this value. For example, the positional FPV mean for forwards is 89. We subtract this number from all forwards to “center” their value. We do this for each position, which rescales the raw FPV numbers in a way that is comparable among all positions.
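To make the two ratings concrete, here is a minimal sketch of FPV and FPV Adj as described above. The weights are the default skater weights from the table; the function names, player names, and projected values are hypothetical, and this is not the code behind the actual tool:

```python
from statistics import mean

# Default skater weights copied from the table above.
SKATER_WEIGHTS = {
    "G": 3, "A": 2, "Pts": 1, "SOG": 0.15, "BLK": 0.1, "HIT": 0.1,
    "ATOI": 1, "FOW": 0.05, "PIM": 0.25, "P_M": 1, "PPP": 1, "SHP": 1,
}


def fpv(projection, weights, selected):
    """FPV: each selected metric multiplied by its weight, then summed."""
    return sum(projection[metric] * weights[metric] for metric in selected)


def fpv_adjusted(players):
    """FPV Adj: subtract the positional mean FPV from each player's FPV.

    `players` is a list of dicts with 'name', 'pos', and 'fpv' keys.
    """
    by_position = {}
    for player in players:
        by_position.setdefault(player["pos"], []).append(player["fpv"])
    position_mean = {pos: mean(values) for pos, values in by_position.items()}
    return {p["name"]: p["fpv"] - position_mean[p["pos"]] for p in players}


# Hypothetical projection for one skater in a league using four metrics.
example = {"G": 30, "A": 40, "Pts": 70, "SOG": 200}
example_fpv = fpv(example, SKATER_WEIGHTS, ["G", "A", "Pts", "SOG"])

# Hypothetical FPV values for two forwards and two defensemen.
players = [
    {"name": "Forward 1", "pos": "F", "fpv": 100.0},
    {"name": "Forward 2", "pos": "F", "fpv": 80.0},
    {"name": "Defenseman 1", "pos": "D", "fpv": 60.0},
    {"name": "Defenseman 2", "pos": "D", "fpv": 40.0},
]
adjusted = fpv_adjusted(players)
```

In this toy example the top forward and the top defenseman end up with the same FPV Adj even though their raw FPV values differ by 40, which is the point of centering by position.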

In this case, we are using average here for all positions instead of replacement level. The simple reason for this is that average is easier and replacement level in this form (fantasy hockey) is a bit foreign to both of us. There may be some benefit to using replacement level for rescaling positions (i.e. ensuring the rankings make sense for all players), but for the most part, average seems to be sufficient.

Discourse, Notes, Adjustments, and Observed Scaling

As some of you may be aware, we changed the original projections that were released last Friday (September 3rd) after several days of discourse and additional research/work on our end. This is the first year we’ve done fantasy projections, and to be frank, we didn’t quite realize just what kind of world we were entering. As was pointed out to us through recent Twitter discourse (never tweet), our original projections, at least concerning points overall, were lower than other projection systems out there.

Now I’d be naïve if I said I didn’t realize this when building these models. This was, in a way, by design based on the system we chose to use. We modeled raw metrics, when possible (as opposed to rate versions), and didn’t do any ad hoc or after-the-fact adjustments to any of our outputs. (Note: In the last few days, I did build a few rate models for comparison and found them to be extremely similar, so this was not the “problem”.) Given the uncertainty of the last few seasons (the prior two shortened seasons, COVID-19, and their impact on the data structures) along with this being our first year doing these, it felt appropriate to rely on a conservative approach to the system. The rankings overall were acceptable in our opinion, but the model outputs for the extreme high and low players were significantly pushed towards the center of their respective distributions. Basically, the top players’ projections were really low compared to expectation.

While this system handled the distributions within the population for every metric well (possibly better than other projection systems out there based on our research), we realized there was one major problem specific to fantasy:

Points leagues in fantasy hockey weight the raw metrics (as opposed to Z-scores, for instance). If the projected metrics are not on a similar scale to those observed in “real life”, the weighting system will not rank players in a way that is applicable to real life leagues.

There are varying degrees of uncertainty between all metrics in fantasy hockey. Because of this, our predictions for some metrics were previously more in line with the observed real-life values (shots on goal, blocks, hits, penalty minutes). However, other metrics are far more uncertain (points, goals against, +/-) – our predicted values for these metrics were not in line with the observed real-life values. Combining these metrics and weighting them led to some potentially incorrect rankings in our total value metrics (FPV).

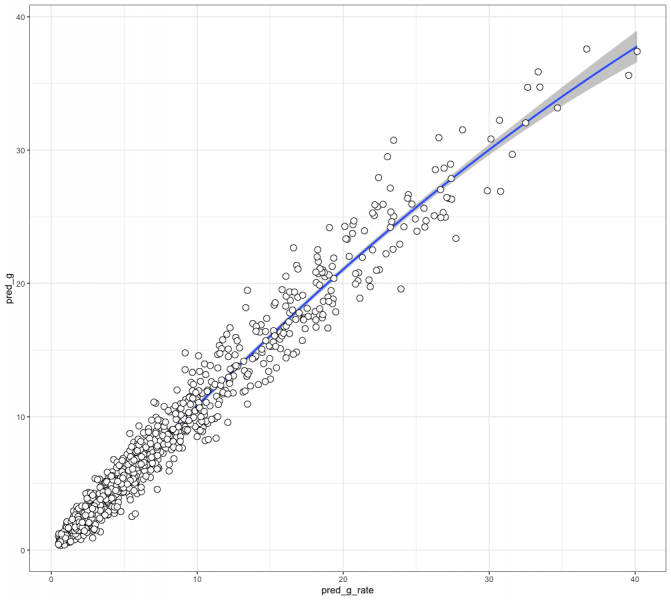

To overcome this issue, we performed something of a Z-score transformation for all metrics to rescale our projections to be in line with the observed values of the previous two seasons (’19-20 and ’20-21). For each metric, we found the Standard Deviation and Mean of both our predicted values and the observed values over the previous two years and rescaled the predictions to mirror previous observed values. This DOES NOT change the actual rankings for any player in any specific metric, it only rescales the actual predicted values to be in line with those previously observed in the two prior seasons.
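As a rough illustration of the rescaling described above, the sketch below standardizes the predictions and then maps them onto the observed mean and standard deviation. The function name and the sample numbers are hypothetical, and using the population standard deviation is an assumption (the exact variant is not specified here):

```python
from statistics import mean, pstdev


def rescale_to_observed(predicted, observed):
    """Rescale predictions so their mean and SD match the observed values.

    Each prediction is converted to a Z-score within the predicted
    distribution, then placed on the observed scale. The mapping is linear
    with a positive slope, so the ordering of players is unchanged.
    """
    pred_mean, pred_sd = mean(predicted), pstdev(predicted)
    obs_mean, obs_sd = mean(observed), pstdev(observed)
    if pred_sd == 0:
        # Degenerate case: all predictions identical (assumption of this sketch).
        return [obs_mean for _ in predicted]
    return [obs_mean + (x - pred_mean) / pred_sd * obs_sd for x in predicted]


# Hypothetical point projections that are compressed towards the center.
predicted = [55, 70, 40, 62, 48]
# Hypothetical observed point totals with a wider spread.
observed = [20, 45, 60, 85, 110, 30]

rescaled = rescale_to_observed(predicted, observed)
```

Because only the location and spread change, sorting players by `rescaled` gives the same order as sorting by `predicted`.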

Full disclosure: this is not a standard technique from what we can tell. However, this adjustment does allow us to ensure that the fantasy weights being used for the overall ranking are apples to apples with what any user should expect in their fantasy league this coming season. It is also important to remember that the actual rankings for all players in each metric did not change because of this. In our overall FPV metrics, there are a few players who have moved around, but the overall rankings are now more in line with what should be expected in the coming year. Over the next year, we will likely spend some time working with the modeling process to see if we can find a way to address the issue of scaling within the models instead of adjusting the model outputs themselves.

I would like to say that I still stand by our original projections and the respective models for all the individual metrics. The models do a very good job dealing with the entire population. For some of the projections, they did struggle with the extreme upper bounds, but it’s also important to remember that the predictions here are point estimates only. While we may have originally predicted McDavid at 97 points (which has been rescaled to 116), it’s likely his upper error bound was much higher than this. So, do we still stand by our original tweet stating that the NHL Network’s projected point totals for all players were too high? Not really, but I still think there is room for our original opinion to be right. That’s statistics, baby.

Conclusion

Our fantasy projections are live and ready to go for your fantasy hockey drafts heading into the ’21-22 NHL season. Building a fantasy projection system has been a wild ride, but it has been a major learning experience for both of us. Some “fun” discourse occurred, we got to admit we were maybe wrong, and I also had the incredibly enjoyable (dreadful) experience of building models for +/- and goalie box score metrics! It took us a while to complete these, but we’re quite happy with how everything turned out. We hope you feel the same way. Given this is our first year doing this, we would love any feedback you may have about the projections and the tool. We plan to update the projections page as the season moves along – hopefully the tool can be useful throughout the year. Who knows? Maybe we’ll make an 8-person league, draft teams entirely based on our projections, and monitor how the teams perform. Although, at this point, I think I’d be happy if I never looked at a box score again.

This is great, thank you! And I appreciate the detailed explanation on what changed since the whole Twitter thing.

Happy to provide the details!

Quick question – my categories league uses PIM as a negative (so fewest PIM wins the category). If I set the weight for PIM to -1, with all others set to 1, will that work?

Good question! Testing it out, this should work. When you tried this, did the results look “correct”?

Sorry, forgot to check back about this! I think they look “correct”; guys like Tkachuk and Marchand dropped way down the list when set to -1

Can you explain “Games Started” for Goalies? Shouldn’t the “Games Started” for a team’s goalies = 82 since there is 82 games per team this season?

And how would someone like Rask have 45 “Games Started” when his future in the NHL is unclear? This severely cripples your projections from Ullmark and Swayman.

The rankings you have on the page are great and love the simple flow of stats. Curious though is the fantasy projections going to be a daily update, weekly monthly or random update?

Really cool stuff. How often will the projections be updated? Were there any features that turned out to be good predictors that surprised you?